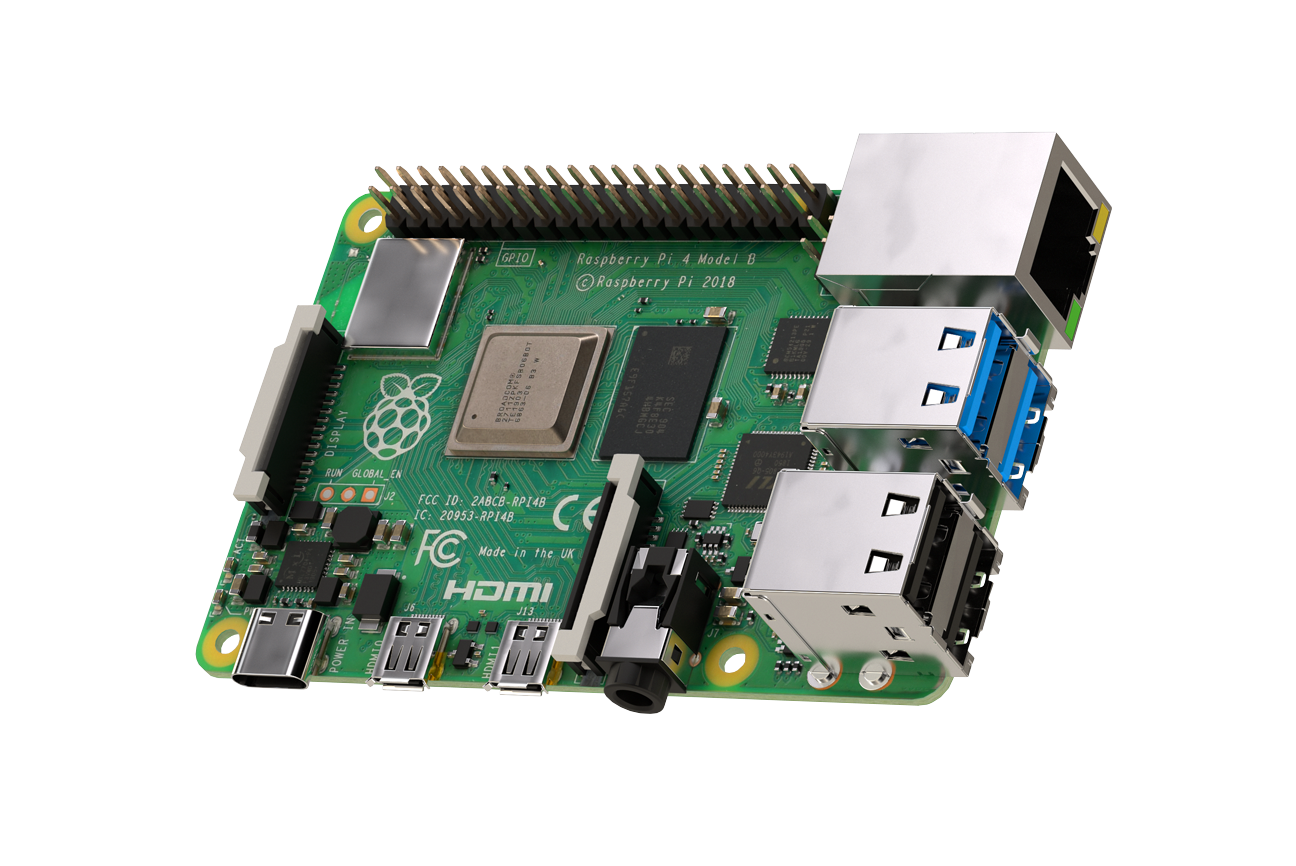

Prepare the Pi 4

Step 1: Get a « valid » 64-bit system

You may be using a Pi 4 with 8 GB of RAM and want to benefit from all of it.

For this you first need a stable, working 64-bit system. At the time I'm writing these lines, I recommend the Ubuntu one (the HypriotOS one is not ready, and the 64-bit Raspbian one is still not upstream).

Go to the Ubuntu download page for Raspberry Pi images and download the 64-bit version for Raspberry Pi 4: Ubuntu Server 20.04.2 LTS.

Step 2: Flash your SD card with the system

For this you can use Raspberry Pi Imager from https://www.raspberrypi.org/software/. Just make sure to select the « Custom » option and pick the image you downloaded before (the headless server one).

Step 3: Configure the network on each Pi « node »

I've been using four Pis on my side.

First, to make sure each Pi always gets the same IP, I'll let you play with your router's (« box ») DHCP settings to reserve a fixed IP for each Pi's MAC address. Once done, you may have something close to:

| IP | MAC | HOSTNAME |
|---|---|---|
| 192.168.1.161 | dc:a6:xxx | node1 |
| 192.168.1.162 | dc:a6:yyy | node2 |
| 192.168.1.163 | dc:a6:zzz | node3 |
| 192.168.1.164 | dc:a6:uuu | node4 |

Then you need to configure the network a bit on each Pi itself:

```
# Use SSH to log on to your Pi
> ssh ubuntu@192.168.1.xxx
# Default password is "ubuntu"; you will be asked to change it
> sudo vim /etc/hosts    # <= enter all node IPs
# You should have a section like
# 192.168.1.161 node1
# 192.168.1.162 node2
# 192.168.1.163 node3
# 192.168.1.164 node4
> sudo vi /etc/hostname  # <= enter the current hostname
# On node1 you should have "node1", and so on for node2, node3, node4
```
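If you would rather not edit /etc/hosts by hand on four Pis, the same change can be scripted. Below is a minimal POSIX sh sketch; the function name `add_hosts_entries` and the `NODES` list are my own illustrative assumptions (copy the values from your DHCP table). It only appends pairs that are missing, so running it twice is harmless.

```sh
#!/bin/sh
# Hypothetical helper (not part of Ubuntu or k3s): append the cluster's
# "IP hostname" pairs to a hosts file, skipping pairs already present.
# NODES mirrors the DHCP reservations above; replace with your own values.
NODES="192.168.1.161 node1
192.168.1.162 node2
192.168.1.163 node3
192.168.1.164 node4"

add_hosts_entries() {
  hosts_file="$1"   # /etc/hosts on the Pi, or a scratch copy to try it out
  printf '%s\n' "$NODES" | while read -r ip name; do
    # awk splits on spaces AND tabs, so an existing "IP<TAB>name" line counts too
    if ! awk -v ip="$ip" -v n="$name" \
        '$1 == ip { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
         END { exit !found }' "$hosts_file"; then
      printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
    fi
  done
}
```

Assuming you saved it as add_hosts.sh, something like `sudo sh -c '. ./add_hosts.sh && add_hosts_entries /etc/hosts'` applies it, and `sudo hostnamectl set-hostname node1` (node2, ... on the other Pis) writes /etc/hostname for you.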

Then make sure your system is up to date:

```
> sudo apt update
> sudo apt -y upgrade && sudo systemctl reboot
```

Configure your master (node1)

```
> sudo apt install apt-transport-https ca-certificates curl software-properties-common -y
> curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo apt-key add -
```

Warning: k3s requires the memory cgroup to be enabled; otherwise, later on, you will get the error « Failed to find memory cgroup ».

To avoid this, append the following text at the end of the (single) line in /boot/firmware/cmdline.txt: `cgroup_memory=1 cgroup_enable=memory`

```
> sudo vi /boot/firmware/cmdline.txt
# Add cgroup_memory=1 cgroup_enable=memory at the end, on the same line
# On my side I ended up with this content:
# net.ifnames=0 dwc_otg.lpm_enable=0 console=serial0,115200 console=tty1 root=LABEL=writable rootfstype=ext4 elevator=deadline rootwait fixrtc cgroup_memory=1 cgroup_enable=memory
> sudo shutdown -r now
# Note: after the reboot you can check which kernel command line was used with
> cat /proc/cmdline
```
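Since every kernel argument must stay on that one line, a scripted edit is less error-prone than vi. A minimal sketch, assuming the file holds a single line as it does on the Ubuntu 20.04 Pi image; both function names are hypothetical. It keeps a `.bak` copy and only adds the flags that are missing.

```sh
#!/bin/sh
# Hypothetical helper: append the memory cgroup flags k3s needs to the
# kernel command line file, adding only the flags that are missing.
# Assumes the file is a single line (kernel arguments must not be split).
enable_memory_cgroup() {
  cmdline_file="$1"                    # e.g. /boot/firmware/cmdline.txt
  cp "$cmdline_file" "$cmdline_file.bak" || return 1
  line=$(head -n 1 "$cmdline_file")
  for flag in cgroup_memory=1 cgroup_enable=memory; do
    case " $line " in
      *" $flag "*) ;;                  # already there: keep as is
      *) line="$line $flag" ;;         # missing: append with a space
    esac
  done
  printf '%s\n' "$line" > "$cmdline_file"
}

# After the reboot: succeed only if the kernel was booted with the flag.
memory_cgroup_requested() {
  case " $(cat "${1:-/proc/cmdline}") " in
    *" cgroup_enable=memory "*) return 0 ;;
    *) return 1 ;;
  esac
}
```

For example, `sudo sh -c '. ./cgroup.sh && enable_memory_cgroup /boot/firmware/cmdline.txt'`, reboot, then `. ./cgroup.sh && memory_cgroup_requested && echo ok`. The last column of the `memory` line in /proc/cgroups ("enabled") should then read 1.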

Then install k3s using:

```
> curl -sfL https://get.k3s.io | sh -
```

Check that everything is fine:

```
> sudo kubectl get nodes
# Should display something close to
# NAME    STATUS   ROLES                  AGE   VERSION
# node1   Ready    control-plane,master   24h   v1.20.2+k3s1
```

```
> systemctl status k3s.service
# Should not display errors; the following warnings are not a problem:
#   Cannot read number of physical cores
#   Cannot read number of sockets correctly
```

```
> journalctl -xe
# Should not display errors; the following warnings are not a problem:
#   Cannot read number of physical cores
#   Cannot read number of sockets correctly
```
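`journalctl -xe` mixes every service together. You can restrict the journal to the k3s unit and filter out the two harmless warnings. This is a rough sketch: the function name and the `error|fail` pattern are my assumptions, so treat any match as something to read, not as proof of a problem.

```sh
#!/bin/sh
# Hypothetical filter: read log lines on stdin and print those that look like
# errors, ignoring the two core/socket warnings known to be harmless on the Pi.
k3s_suspicious_lines() {
  grep -iE 'error|fail' \
    | grep -v -e 'Cannot read number of physical cores' \
              -e 'Cannot read number of sockets correctly'
}
```

Typical use: `sudo journalctl -u k3s -b --no-pager | k3s_suspicious_lines` (only the current boot, only the k3s service).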

```
> sudo kubectl get nodes -o jsonpath='{.items[].status.capacity.cpu}{"\n"}'
# Should give 4, because your CPU has 4 cores
# This check should take the stress out of the warnings above
```
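Since the whole point of the 64-bit image is to use the 8 GB, it can be worth printing memory too, one line per node, once the workers have joined. The sketch below uses a jsonpath `range` over all nodes and wraps it in a CPU check. `KUBECTL` defaults to `sudo kubectl` (as on the master); it and the function names are assumptions you can change.

```sh
#!/bin/sh
# Command used to reach the cluster; override with KUBECTL=kubectl elsewhere.
KUBECTL=${KUBECTL:-"sudo kubectl"}

# Print "name cpu memory" for every node (memory is reported in Ki).
node_capacity() {
  $KUBECTL get nodes -o jsonpath='{range .items[*]}{.metadata.name}{" "}{.status.capacity.cpu}{" "}{.status.capacity.memory}{"\n"}{end}'
}

# Hypothetical check: fail if any node reports a CPU count other than $1.
all_nodes_have_cpus() {
  expected="$1"
  node_capacity | awk -v want="$expected" '
    NF == 0 { next }                       # ignore blank lines
    $2 != want { print "unexpected cpu count: " $0; bad = 1 }
    END { exit bad }'
}
```

For example, `all_nodes_have_cpus 4 && node_capacity` checks the CPUs and then shows the memory of each Pi.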

Configure your slaves (node2, node3, node4)

Do exactly the same steps as for the master, except the k3s install (i.e. add the cgroup_memory flags to /boot/firmware/cmdline.txt and reboot).

On the MASTER (node1): Extract the JOIN TOKEN

```
> sudo cat /var/lib/rancher/k3s/server/node-token
# This gives something like
# XXXXXXXXXXXXXXXXXXXXX::server:YYYYYYYYYY
```

On each SLAVE (node2, node3, node4): install k3s in join mode (replace the node1 IP and the join token below with your own)

```
> curl -sfL https://get.k3s.io | K3S_URL=https://<NODE1 IP>:6443 K3S_TOKEN=XXXXXXXXXXXXXXXXXXXXX::server:YYYYYYYYYY sh -
```
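With three workers, the join can be driven from the master over SSH instead of logging in to each Pi. A minimal sketch, assuming SSH as the `ubuntu` user works from node1 and the hostnames resolve through /etc/hosts. `join_command` is a hypothetical helper that only prints the command line, so you can inspect it before running it.

```sh
#!/bin/sh
# Hypothetical helper: print the worker install command for a given master IP
# and join token (the token comes from /var/lib/rancher/k3s/server/node-token).
join_command() {
  master_ip="$1"
  token="$2"
  printf 'curl -sfL https://get.k3s.io | K3S_URL=https://%s:6443 K3S_TOKEN=%s sh -\n' \
    "$master_ip" "$token"
}

# Example, from node1 (commented out so nothing runs by accident;
# -t gives sudo inside the install script a terminal to ask for the password):
# TOKEN=$(sudo cat /var/lib/rancher/k3s/server/node-token)
# for n in node2 node3 node4; do
#   ssh -t "ubuntu@$n" "$(join_command 192.168.1.161 "$TOKEN")"
# done
```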

Check that everything is fine (from the master):

```
> sudo kubectl get nodes
# Should display something close to
# NAME    STATUS   ROLES                  AGE   VERSION
# node1   Ready    control-plane,master   24h   v1.20.2+k3s1
# node3   Ready    <none>                 24h   v1.20.2+k3s1
# node2   Ready    <none>                 24h   v1.20.2+k3s1
# node4   Ready    <none>                 24h   v1.20.2+k3s1
```
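A freshly joined worker can take a little while to switch to Ready. The sketch below counts Ready nodes from `kubectl get nodes --no-headers` (STATUS is the second column) and polls until the expected number is reached. The function names, the default of 60 tries 5 seconds apart, and the `KUBECTL` default are assumptions. `kubectl wait --for=condition=Ready nodes --all` can do a similar job.

```sh
#!/bin/sh
KUBECTL=${KUBECTL:-"sudo kubectl"}

# Count lines whose STATUS column (2nd field) is exactly "Ready";
# "NotReady" or "Ready,SchedulingDisabled" are not counted.
ready_node_count() {
  awk '$2 == "Ready" { n++ } END { print n + 0 }'
}

# Hypothetical poll loop: wait until at least $1 nodes are Ready,
# trying $2 times (default 60), $3 seconds apart (default 5).
wait_for_ready_nodes() {
  want="$1"; tries="${2:-60}"; interval="${3:-5}"
  have=0
  while [ "$tries" -gt 0 ]; do
    have=$($KUBECTL get nodes --no-headers 2>/dev/null | ready_node_count)
    [ "$have" -ge "$want" ] && return 0
    tries=$((tries - 1))
    sleep "$interval"
  done
  echo "only $have of $want nodes Ready" >&2
  return 1
}
```

With four Pis: `wait_for_ready_nodes 4 && echo "cluster ready"`.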

Don't worry about the `<none>` ROLE; this is normal (at least on the k3s version I've tested).
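If the `<none>` bothers you, kubectl fills the ROLES column from `node-role.kubernetes.io/<role>` labels, so you can add one yourself. This is purely cosmetic. In the sketch below, the `worker` role name and the function name are my own choices.

```sh
#!/bin/sh
KUBECTL=${KUBECTL:-"sudo kubectl"}

# Give each named node a "worker" role label so ROLES shows "worker"
# instead of <none>. --overwrite makes re-running harmless.
label_workers() {
  for node in "$@"; do
    $KUBECTL label node "$node" node-role.kubernetes.io/worker=worker --overwrite || return 1
  done
}
# Usage: label_workers node2 node3 node4
```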

Install the kubectl client on Windows (or on your main computer)

This will make your life easier, since you can run kubectl commands without first having to SSH into your master 🙂

Step 1: Get the kubeconfig from your master

```
# From your SSH console on the master (node1)
> sudo cat /etc/rancher/k3s/k3s.yaml
# Copy the content and paste it into a file on your Windows machine
```

Step 2: Install kubectl on Windows

You can just download the kubectl.exe binary by following https://kubernetes.io/docs/tasks/tools/install-kubectl/

```
# Add kubectl.exe to your system PATH and run it once to check it is found
> kubectl
# Replace the content of C:\Users\<your user>\.kube\config (create the folder and file if needed)
# with what you got in Step 1 above
# Replace "server: https://127.0.0.1:6443" with the IP of your master node,
# for me "server: https://192.168.1.161:6443"
```
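Instead of editing the `server:` line by hand, you can produce a ready-to-copy file on node1. A sketch: `remote_kubeconfig` is a hypothetical function that prints a copy of the k3s kubeconfig with the loopback address replaced by the master's LAN IP, leaving the original file untouched.

```sh
#!/bin/sh
# Hypothetical helper: print a copy of the k3s kubeconfig ($1) in which the
# loopback API address is replaced by the master's LAN IP ($2).
remote_kubeconfig() {
  src="$1"; master_ip="$2"
  sed "s#server: https://127\.0\.0\.1:6443#server: https://$master_ip:6443#" "$src"
}
# Example on node1 (k3s.yaml is readable by root only, hence sudo):
#   sudo sh -c '. ./kubeconfig.sh && remote_kubeconfig /etc/rancher/k3s/k3s.yaml 192.168.1.161' > k3s-remote.yaml
# then, from Windows: scp ubuntu@192.168.1.161:k3s-remote.yaml .
# and use that file as your .kube\config
```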

Check that everything is fine:

```
> kubectl get node
# Should display something close to
# NAME    STATUS   ROLES                  AGE   VERSION
# node1   Ready    control-plane,master   24h   v1.20.2+k3s1
# node3   Ready    <none>                 24h   v1.20.2+k3s1
# node2   Ready    <none>                 24h   v1.20.2+k3s1
# node4   Ready    <none>                 24h   v1.20.2+k3s1
```

Install the Kubernetes dashboard

```
> kubectl apply -f https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.0-beta8/aio/deploy/recommended.yaml
```

The command above creates the kubernetes-dashboard namespace and installs many objects in it.

```
# After a while you can check that everything looks fine
> kubectl get po -n kubernetes-dashboard
# NAME                                         READY   STATUS
# dashboard-metrics-scraper-79c5968bdc-jrpqz   1/1     Running
# kubernetes-dashboard-5ff474b78f-svh86        1/1     Running
```

You may want to access your dashboard from your Windows browser using:

```
# [From your Windows machine]
> kubectl proxy
# << Starting to serve on 127.0.0.1:8001
```

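Then open http://localhost:8001/api/v1/namespaces/kubernetes-dashboard/services/https:kubernetes-dashboard:/proxy/ in your browser. And you will see nothing useful (this is normal): the dashboard asks for a token to sign in, and you don't have one yet. To get one, create an admin-user ServiceAccount bound to the cluster-admin role by saving the following as dashboard-admin.yaml: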

```yaml
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kubernetes-dashboard
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: admin-user
  namespace: kubernetes-dashboard
```

```
> kubectl apply -f dashboard-admin.yaml
> kubectl -n kubernetes-dashboard get secret
# The output should include an admin-user-token-xxxx secret
> kubectl -n kubernetes-dashboard describe secret admin-user-token-xxxx
# Extract the token XXXXXXXXXXXXXXXXXXX from the output above
```

Then use that XXXXXXXXXXXXXXXXXXX token to sign in on the dashboard page (choose « Token » on the login screen).
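Looking up the secret name by hand can be skipped by reading it from the ServiceAccount and decoding the token in one go, as the dashboard's own sample-user documentation does. The sketch below wraps that approach. It assumes a cluster where admin-user gets an auto-generated token secret (true for k3s v1.20). On Kubernetes 1.24 and later these secrets are no longer created, and `kubectl -n kubernetes-dashboard create token admin-user` replaces this. `KUBECTL` defaults to plain `kubectl` (your Windows client, e.g. from Git Bash); it and the function name are assumptions.

```sh
#!/bin/sh
KUBECTL=${KUBECTL:-"kubectl"}

# Print the admin-user bearer token: look up the name of the secret attached
# to the ServiceAccount, then decode its base64 "token" field.
dashboard_token() {
  ns=kubernetes-dashboard
  secret=$($KUBECTL -n "$ns" get serviceaccount admin-user -o jsonpath='{.secrets[0].name}') || return 1
  $KUBECTL -n "$ns" get secret "$secret" -o go-template='{{.data.token | base64decode}}'
}
```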